What is a Container?

Containers let you package an application's code, configuration, and dependencies into easy-to-use building blocks that deliver environmental consistency, operational efficiency, developer productivity, and version control. They help ensure that applications deploy quickly, reliably, and consistently regardless of the deployment environment.

Why Use Containers?

Speed

A container with a new release of code can be launched without significant deployment overhead. Operational speed improves because code built in a container on a developer’s local machine can be moved to a test server simply by moving the container. At build time, this container can be linked to the other containers required to run the application stack.

Dependency Control & Improved Pipeline

A Docker container image is a point-in-time capture of an application's code and dependencies. This allows an engineering organization to create a standard pipeline for the application life cycle. For example:

- Developers build and run the container locally.

- A continuous integration server runs the same container and executes integration tests against it to verify that it meets expectations.

- The same container is shipped to a staging environment where its runtime behavior can be checked using load tests or manual QA.

- The same container is shipped to production.

Being able to build, test, ship, and run the exact same container through all stages of the integration and deployment pipeline makes delivering a high-quality, reliable application considerably easier.
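The pipeline above can be sketched in a few lines of Python. This is a minimal illustration, not Docker's implementation: the `Image` class, the stage names, and the `promote` and `is_consistent` helpers are all hypothetical. It models an image as a content-addressed artifact, so that "the same container" at every stage means "the same digest" at every stage.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """Hypothetical stand-in for a container image: a tag plus its content."""
    name: str
    content: bytes

    @property
    def digest(self) -> str:
        # Content-addressed identifier: identical bytes always yield the same digest.
        return "sha256:" + hashlib.sha256(self.content).hexdigest()


# Assumed stage names, mirroring the list above.
STAGES = ["local", "ci", "staging", "production"]


def promote(image: Image, stages=STAGES) -> dict:
    """Record the digest each stage runs; every stage receives the same image."""
    return {stage: image.digest for stage in stages}


def is_consistent(deployed: dict) -> bool:
    """True when every stage runs one and the same digest."""
    return len(set(deployed.values())) == 1


image = Image("myapp:1.0", b"application code + dependencies")
deployed = promote(image)
print(is_consistent(deployed))
```

If any stage rebuilt the image from changed inputs instead of reusing the original, its digest would differ and `is_consistent` would return `False`, which is the drift the single-image pipeline is meant to rule out.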

Density & Resource Efficiency

Containers improve resource efficiency by allowing multiple heterogeneous processes to run on a single system. This efficiency follows from the isolation and allocation techniques that containers use: each container can be limited to a set share of a host's CPU and memory. By understanding what resources a container needs and what resources the underlying host can provide, you can right-size your compute resources, either by using smaller hosts or by increasing the density of processes running on a single large host, which increases availability and optimizes resource consumption.
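To make the right-sizing idea concrete, here is a minimal sketch that places containers with known CPU and memory limits onto hosts using a first-fit-decreasing heuristic. The `Container` and `Host` classes, the sample sizes, and the `place` and `docker_run_args` helpers are assumptions made for illustration; real schedulers weigh many more factors. The generated command uses the `--cpus` and `--memory` options of `docker run` to apply each limit.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    cpus: float       # CPU cores the container may use
    memory_mb: int    # memory limit in megabytes


@dataclass
class Host:
    name: str
    cpus: float
    memory_mb: int
    containers: list = field(default_factory=list)

    def fits(self, c: Container) -> bool:
        # A container fits if both CPU and memory stay within host capacity.
        used_cpu = sum(x.cpus for x in self.containers)
        used_mem = sum(x.memory_mb for x in self.containers)
        return (used_cpu + c.cpus <= self.cpus
                and used_mem + c.memory_mb <= self.memory_mb)


def place(containers, hosts):
    """First-fit decreasing: largest containers first, first host with room wins."""
    unplaced = []
    ordered = sorted(containers, key=lambda c: (c.memory_mb, c.cpus), reverse=True)
    for c in ordered:
        for h in hosts:
            if h.fits(c):
                h.containers.append(c)
                break
        else:
            unplaced.append(c)  # no host had enough spare capacity
    return unplaced


def docker_run_args(c: Container, image: str) -> list:
    """Build a `docker run` command line that applies the container's limits."""
    return ["docker", "run", "--detach", "--name", c.name,
            "--cpus", str(c.cpus), "--memory", f"{c.memory_mb}m", image]


# Hypothetical workload and hosts.
containers = [
    Container("web", 1.0, 1024),
    Container("worker", 1.0, 1024),
    Container("cache", 0.5, 512),
    Container("cron", 0.5, 256),
]
hosts = [Host("host-a", 2.0, 2048), Host("host-b", 2.0, 2048)]
unplaced = place(containers, hosts)

for h in hosts:
    print(h.name, [c.name for c in h.containers])
```

Placing the largest containers first tends to leave smaller gaps for the remaining ones, which is why this heuristic is a common starting point for density planning. It does not guarantee the minimum number of hosts.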

Flexibility

The flexibility of Docker containers comes from their portability, ease of deployment, and small size. In contrast to the installation and configuration required on a VM, packaging services inside containers allows them to be moved easily between hosts, isolated from failures of adjacent services, and protected from errant patches or software upgrades on the host system.

Who is behind Docker?

- Docker is an open source project managed by Docker, Inc.

- As the project site states: "Docker is a platform for developers to develop, ship, and run applications."

- Originally released in March 2013 by dotCloud, Inc., which became Docker, Inc. in October 2013.

- Docker 1.0 was announced in June 2014.

- Alliances with Red Hat (RHEL Atomic and OpenShift) and other OS developers: CoreOS, SmartOS (derived from OpenSolaris).

- Alliances with Google, Amazon, and other cloud providers to make sure Docker runs in those cloud environments.

Delivering Applications with Docker

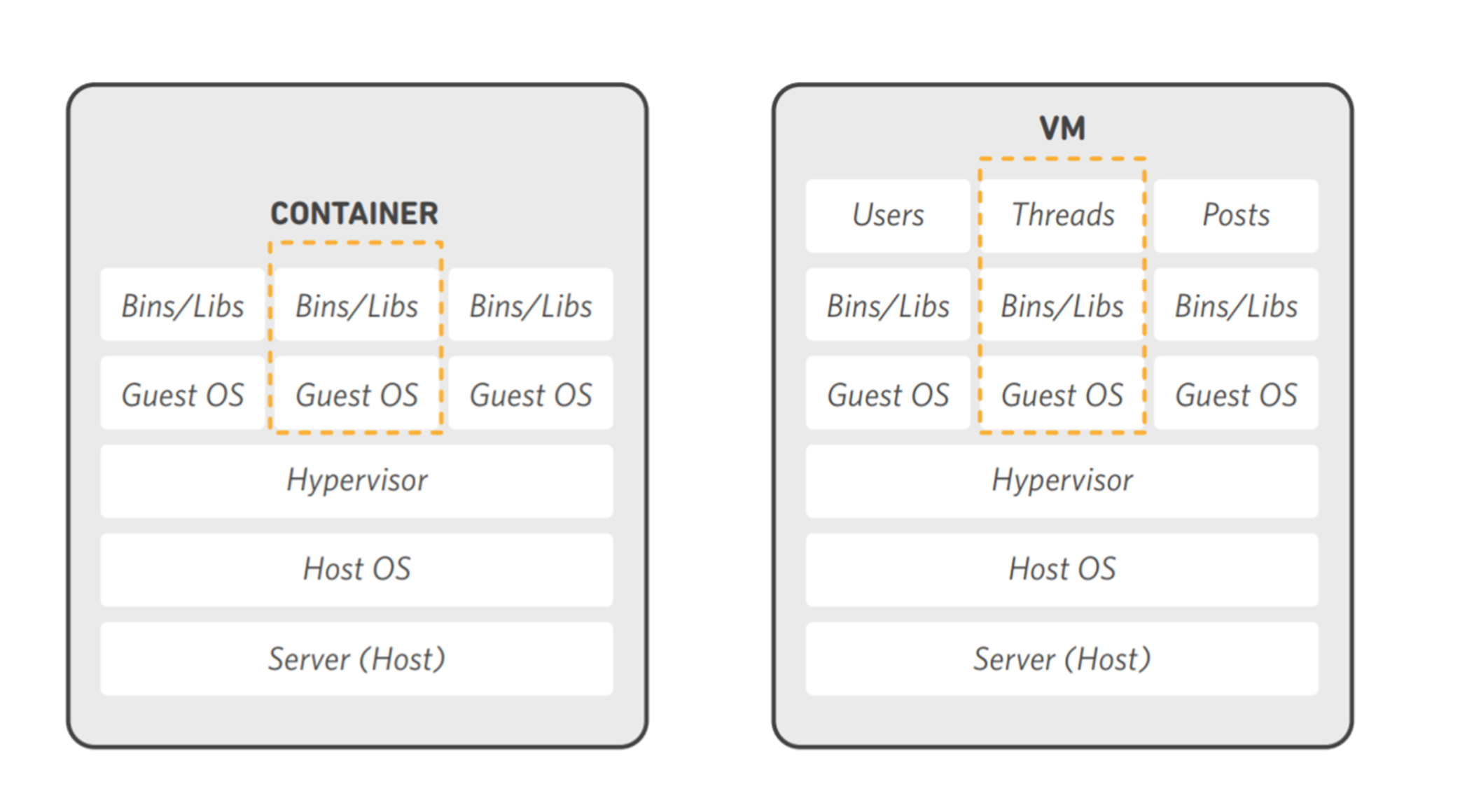

Applications have evolved from running directly on hardware, to virtual machines, to containers, and on to FaaS (functions as a service, which run in containers).

- Containers are:

  - Lighter than VMs

  - More flexible and secure than running directly on metal

- Containers bring their dependencies with them

- Different environments can deploy containers: Linux, macOS, Windows, clouds

  - Full-blown Linux distributions

  - Lean operating systems built for containers: CoreOS, Atomic, SmartOS
